GANs beyond generation: 7 alternative use cases

Hi everyone!

Like many people following advances in AI, I couldn’t ignore the recent progress in generative modeling, in particular the great success of generative adversarial networks (GANs) in image generation. Look at these samples: they’re barely distinguishable from real photos!

The progress of face generation from 2014 to 2018 is also remarkable:

I am very excited by these results, but my inner sceptic always doubts whether they are really useful and broadly applicable. I already raised this question in a Facebook note:

Basically, I “complained” that with all the power of generative models, we don’t really use them for anything more practical than generating high-res faces or burgers. Of course, there are businesses that can be built directly on image generation or style transfer (like character or level generation in the gaming industry, or style transfer from real photos to anime avatars), but I was looking for more areas where GANs and other generative models can be applied. I would also like to remind you that generative models can produce not only images, but also text, sounds, voices, music, and structured data like game levels or drug molecules, and there is an amazing blog post about other generation-like applications. Here, however, we will restrict ourselves to examples where synthesis is not the main goal.

In this article I present 7 alternative use cases. I have worked with some of them personally and can confirm their usefulness; others are still at the research stage, but that doesn’t mean they’re not worth a try. All these examples of using generative models for something other than creation can be applied in different areas and to different kinds of data, since our main goal will not be generating something realistic, but exploiting the inner knowledge of neural networks for new tasks.

1. Data augmentation

Maybe the most obvious application is to train a model to generate new samples from our data in order to augment the dataset. How do we check whether this augmentation really helped? There are two main strategies. We can train our model on “fake” data and check how well it performs on real samples. Or the opposite: we train our model on real data for some classification task, and only afterwards check how well it performs on generated data (GAN fans may recognize the idea behind the Inception Score here). If it works well in both cases, feel free to add samples from the generative model to your real data and retrain: you can reasonably expect a performance gain. To make this approach even more powerful and flexible, check application #6.
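
Both checks can be sketched in plain Python. Everything here is hypothetical and chosen only to keep the example self-contained: a two-blob toy dataset, a per-class Gaussian fit standing in for a trained conditional GAN (`fit_toy_generator`), and a nearest-centroid classifier standing in for “your model”.

```python
import random
import statistics

random.seed(0)

def make_real_data(n_per_class):
    """Hypothetical 'real' dataset: two 2-D Gaussian blobs with labels 0 and 1."""
    data = []
    for label, (cx, cy) in enumerate([(0.0, 0.0), (3.0, 3.0)]):
        for _ in range(n_per_class):
            data.append(((random.gauss(cx, 1.0), random.gauss(cy, 1.0)), label))
    return data

def fit_toy_generator(data):
    """Stand-in for a trained conditional GAN: per-class, per-feature Gaussian fit."""
    params = {}
    for label in sorted({y for _, y in data}):
        xs = [x for x, y in data if y == label]
        params[label] = [(statistics.mean(col), statistics.stdev(col)) for col in zip(*xs)]
    return params

def sample(params, n_per_class):
    """Draw labelled synthetic samples from the toy generator."""
    return [(tuple(random.gauss(m, s) for m, s in feats), label)
            for label, feats in params.items() for _ in range(n_per_class)]

def train_nearest_centroid(data):
    """Stand-in for 'your model': one centroid per class."""
    centroids = {}
    for label in sorted({y for _, y in data}):
        xs = [x for x, y in data if y == label]
        centroids[label] = tuple(statistics.mean(col) for col in zip(*xs))
    return centroids

def accuracy(centroids, data):
    def predict(x):
        return min(centroids, key=lambda c: sum((a - b) ** 2 for a, b in zip(x, centroids[c])))
    return sum(predict(x) == y for x, y in data) / len(data)

real_train, real_test = make_real_data(200), make_real_data(200)
fake = sample(fit_toy_generator(real_train), 200)

# Strategy 1: train on synthetic data, evaluate on real data.
train_fake_test_real = accuracy(train_nearest_centroid(fake), real_test)
# Strategy 2: train on real data, evaluate on synthetic data.
train_real_test_fake = accuracy(train_nearest_centroid(real_train), fake)
print(f"train fake / test real: {train_fake_test_real:.2f}")
print(f"train real / test fake: {train_real_test_fake:.2f}")
```

With a real GAN you would replace `fit_toy_generator` and `sample` with your trained generator. If both numbers stay close to what the model achieves on real data alone, the synthetic samples are a reasonable candidate for augmentation.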

NVIDIA showed an amazing example of this approach in action: they used GANs to augment a dataset of medical brain CT images with different diseases. With only classic data augmentation, classification yielded 78.6% sensitivity and 88.4% specificity; adding the synthetic data augmentation increased the results to 85.7% sensitivity and 92.4% specificity.
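
For reference, this is what the two metrics measure (TP, FN, TN, FP are true/false positives/negatives on the test set). It also shows the size of the reported change, worked out from the numbers above. The relative reductions are simple arithmetic on those percentages, not additional results.

```latex
\text{sensitivity} = \frac{TP}{TP + FN}, \qquad
\text{specificity} = \frac{TN}{TN + FP}

\begin{aligned}
\Delta_{\text{sens}} &= 85.7\% - 78.6\% = 7.1 \text{ points}, &
\text{miss rate: } 21.4\% &\to 14.3\% \;(\approx 33\% \text{ relative reduction}) \\
\Delta_{\text{spec}} &= 92.4\% - 88.4\% = 4.0 \text{ points}, &
\text{false-positive rate: } 11.6\% &\to 7.6\% \;(\approx 34\% \text{ relative reduction})
\end{aligned}
```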

2. Privacy preserving

The data of many companies can be secret (like financial data that makes money), confidential, or sensitive (like medical data containing patients’ diagnoses). But sometimes we need to share it with third parties like consultants or researchers. If we just want to share the general idea of our data, including its most important patterns, details, and object shapes, we can use generative models directly, as in the previous section, to sample examples of our data and share those instead. This way we don’t share any exact confidential records (provided the model hasn’t memorized individual training examples), just something that looks exactly like them.

A more difficult case is when we want to share the data itself, but secretly. Of course, there are encryption schemes like homomorphic encryption, but they have known drawbacks, such as a huge ciphertext overhead (hiding 1MB of information in 10GB of code). In 2016, Google opened a new research path by applying the GAN-style competitive framework to the encryption problem, where neural networks competed in creating the code and cracking it:
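
To give that competition a concrete shape, here is one way to write the game down. The assumptions are an N-bit plaintext P, a shared key K, an encryptor A (“Alice”), a decryptor B (“Bob”), an eavesdropper E (“Eve”), and d counting the bits that differ. This follows the Alice/Bob/Eve setup of Google’s paper as we understand it; treat the exact weighting of the second term as an assumption.

```latex
\begin{aligned}
C &= A(P, K), \qquad \hat{P}_{\text{Bob}} = B(C, K), \qquad \hat{P}_{\text{Eve}} = E(C) \\
\mathcal{L}_{E} &= \mathbb{E}_{P,K}\left[ d\left(P, \hat{P}_{\text{Eve}}\right) \right]
  && \text{(minimized by Eve)} \\
\mathcal{L}_{AB} &= \mathbb{E}_{P,K}\left[ d\left(P, \hat{P}_{\text{Bob}}\right) \right]
  + \frac{\left(N/2 - \mathcal{L}_{E}\right)^2}{(N/2)^2}
  && \text{(minimized by Alice and Bob)}
\end{aligned}
```

The second term pushes Eve toward N/2 wrong bits, i.e. random guessing, rather than toward being always wrong. An Eve that is always wrong would leak everything, because she could simply flip her bits.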

But the best part is not the efficiency of the obtained code or the “AI” buzzword in yet another area. We should remember that representations obtained by neural networks very often still contain the most useful information about the input data (some, like autoencoders, are even trained directly to preserve it), and from this compressed data we can still do classification, regression, clustering, or whatever we want. If we replace “compressed” with “encrypted”, the idea is clear: this is an amazing way to share data with third parties without revealing the raw records. It is potentially much stronger than anonymization or even fake sample generation, and may be the next big thing (with blockchain, of course, as Numerai already does).
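
A toy sketch of the “downstream models still work on transformed data” idea follows. As a loud assumption, a secret random rotation stands in for a learned encoder. A rotation is **not** encryption: anyone with a few known (raw, encoded) pairs can recover it. It only demonstrates that a distance-based classifier trained on the transformed features performs as well as one trained on the raw features. The dataset and function names are hypothetical.

```python
import math
import random

random.seed(1)
DIM = 4

def random_orthogonal(dim):
    """Secret random rotation via Gram-Schmidt (toy stand-in for a learned encoder; NOT secure)."""
    basis = []
    while len(basis) < dim:
        v = [random.gauss(0, 1) for _ in range(dim)]
        for b in basis:  # remove components along directions already chosen
            proj = sum(x * y for x, y in zip(v, b))
            v = [x - proj * y for x, y in zip(v, b)]
        norm = math.sqrt(sum(x * x for x in v))
        if norm > 1e-9:
            basis.append([x / norm for x in v])
    return basis  # rows are orthonormal, so distances are preserved

def encode(matrix, x):
    return tuple(sum(m * xi for m, xi in zip(row, x)) for row in matrix)

def make_data(n):
    """Hypothetical two-class data in DIM dimensions."""
    data = []
    for _ in range(n):
        label = random.randint(0, 1)
        center = 0.0 if label == 0 else 2.0
        data.append((tuple(random.gauss(center, 1.0) for _ in range(DIM)), label))
    return data

def nearest_centroid_accuracy(train, test):
    centroids = {}
    for label in (0, 1):
        xs = [x for x, y in train if y == label]
        centroids[label] = [sum(col) / len(xs) for col in zip(*xs)]
    def predict(x):
        return min(centroids, key=lambda c: sum((a - b) ** 2 for a, b in zip(x, centroids[c])))
    return sum(predict(x) == y for x, y in test) / len(test)

train, test = make_data(300), make_data(300)
secret = random_orthogonal(DIM)  # stays with the data owner
enc_train = [(encode(secret, x), y) for x, y in train]
enc_test = [(encode(secret, x), y) for x, y in test]

raw_acc = nearest_centroid_accuracy(train, test)
enc_acc = nearest_centroid_accuracy(enc_train, enc_test)  # third party never sees raw x
print(f"raw features: {raw_acc:.2f}, transformed features: {enc_acc:.2f}")
```

A learned encoder would not preserve distances exactly the way a rotation does. The hope is that it preserves whatever structure the downstream task needs.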

3. Anomaly detection

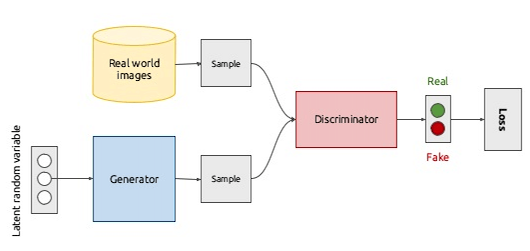

The main generative models, like the variational autoencoder (VAE) or the GAN, consist of two parts. A VAE has an encoder and a decoder: the first basically models the distribution and the second reconstructs the input from it. A GAN consists of a generator and a discriminator: the first models the distribution and the second judges whether a sample is close to the training data. As we can see, they’re similar in a way: there is a modeling part and a judging part (in a VAE, we can consider reconstruction a kind of judgement). The modeling part is supposed to learn the data distribution. What happens to the judging part if we give it a sample that doesn’t come from the training distribution? A well-trained GAN discriminator should score it close to 0 (“fake”), and the VAE’s reconstruction error will be higher than its average on the training data. Here it is: an unsupervised anomaly detector, easily trained and evaluated. And we can put it on steroids with statistical distances if we want (Wasserstein GAN). In this paper you can find an example of a GAN used for anomaly detection, and here an example with an autoencoder. I also add my own rough sketch of an autoencoder-based anomaly detector for time series, written in Keras:
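
The same reconstruction-error idea can be shown without any framework. This is not that Keras sketch. It assumes a toy sine-wave time series, sliding windows of 8 samples, and a *linear* autoencoder with a 2-unit bottleneck. At its optimum, such an autoencoder spans the same subspace as the top principal components, so PCA via power iteration stands in for training. The threshold rule (1.5 times the largest training error) is a hypothetical choice.

```python
import math
import random

random.seed(42)
WINDOW, LATENT = 8, 2  # window length and bottleneck size (hypothetical choices)

def sine_series(n, noise):
    """Hypothetical 'normal' signal: a sine wave with period 20 plus Gaussian noise."""
    return [math.sin(2 * math.pi * t / 20) + random.gauss(0, noise) for t in range(n)]

def windows(series, size):
    return [series[i:i + size] for i in range(len(series) - size + 1)]

def covariance(rows):
    n, d = len(rows), len(rows[0])
    mean = [sum(r[j] for r in rows) / n for j in range(d)]
    cov = [[sum((r[i] - mean[i]) * (r[j] - mean[j]) for r in rows) / (n - 1)
            for j in range(d)] for i in range(d)]
    return mean, cov

def top_components(cov, k, iters=300):
    """Top-k principal directions via power iteration, keeping each new direction
    orthogonal to the ones already found."""
    d = len(cov)
    comps = []
    for _ in range(k):
        v = [random.random() + 0.1 for _ in range(d)]
        for _ in range(iters):
            w = [sum(cov[i][j] * v[j] for j in range(d)) for i in range(d)]
            for c in comps:
                dot = sum(a * b for a, b in zip(w, c))
                w = [a - dot * b for a, b in zip(w, c)]
            norm = math.sqrt(sum(a * a for a in w))
            v = [a / norm for a in w]
        comps.append(v)
    return comps

def reconstruction_error(x, mean, comps):
    """Encode to LATENT numbers, decode back, return the mean squared error."""
    centered = [a - m for a, m in zip(x, mean)]
    codes = [sum(c * a for c, a in zip(comp, centered)) for comp in comps]  # encoder
    recon = [sum(code * comp[j] for code, comp in zip(codes, comps))
             for j in range(len(x))]                                        # decoder
    return sum((a - r) ** 2 for a, r in zip(centered, recon)) / len(x)

# "Training": fit the linear autoencoder on normal data only.
train_windows = windows(sine_series(600, 0.05), WINDOW)
mean, cov = covariance(train_windows)
comps = top_components(cov, LATENT)
train_errors = [reconstruction_error(w, mean, comps) for w in train_windows]
threshold = 1.5 * max(train_errors)  # hypothetical rule; tune it on validation data

# Detection: a new series with one injected spike at t = 100.
test_series = sine_series(200, 0.05)
test_series[100] += 2.0
flagged = [i for i, w in enumerate(windows(test_series, WINDOW))
           if reconstruction_error(w, mean, comps) > threshold]
print("windows flagged as anomalous (start index):", flagged)
```

A non-linear (or variational) autoencoder in Keras follows the same recipe: fit it on normal windows only, record the training reconstruction errors, and flag new windows whose error exceeds a threshold.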

4. Discriminative modeling

Essentially, what deep learning does is map input data into some space where it’s more easily separable or explainable by simple mathematical models like SVM or logistic regression. Generative models do their own mapping too; let’s start with VAEs. Autoencoders map an input sample into a meaningful latent space, and we can basically train some model directly on top of it. Does it make any sense? Is it different from just using the encoder layers and training a model to do classification directly? It is indeed. The latent space of an autoencoder is a complex non-linear dimensionality reduction (and, in the case of a variational autoencoder, also a multivariate distribution), which can be a much better starting point for training a discriminative model than random initialization.

GANs are a bit more difficult to use for other tasks. They’re designed to generate samples from a random seed and don’t expect any input. But we can still exploit them as classifiers in at least two ways. The first, already researched, is to let the discriminator classify real samples into the different classes in addition to telling whether a sample is real or fake. We can expect the resulting classifier to be better regularized (since it has seen different kinds of noise and perturbations of the input data) and to have an extra class for outliers / anomalies:
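
To make the “extra class” concrete, here is a sketch of how the output of such a discriminator, with K real-class logits plus one “fake” class, can be read at inference time. It assumes a convention used in semi-supervised GAN work: the fake logit is fixed at 0. Under that convention, p(real | x) = Z / (Z + 1), where Z is the sum of the exponentiated class logits. The function name and the example logits are hypothetical.

```python
import math

def logsumexp(xs):
    m = max(xs)
    return m + math.log(sum(math.exp(x - m) for x in xs))

def sigmoid(t):
    if t >= 0:
        return 1.0 / (1.0 + math.exp(-t))
    e = math.exp(t)
    return e / (1.0 + e)

def read_discriminator(class_logits):
    """Interpret a (K+1)-way discriminator whose 'fake' logit is fixed at 0.

    p(real | x)          = Z / (Z + 1) = sigmoid(log Z), with Z = sum_k exp(l_k)
    p(class k | x, real) = softmax(l)_k
    """
    lse = logsumexp(class_logits)  # log Z, computed stably
    p_real = sigmoid(lse)
    class_probs = [math.exp(l - lse) for l in class_logits]
    return p_real, class_probs

# Hypothetical logits for K = 3 classes from one forward pass.
p_real, probs = read_discriminator([2.0, 0.5, -1.0])
predicted = max(range(len(probs)), key=probs.__getitem__)
print(f"p(real) = {p_real:.3f}, class = {predicted}")
```

A low `p_real` serves as the outlier / anomaly signal. Otherwise, the argmax over `probs` is the class prediction.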

The second is the unfairly forgotten approach to classification with Bayes’ theorem, where we obtain p(c_k|x) from p(x|c_k) (the distribution a conditional GAN learns to sample from!), the class priors p(c_k), and the evidence p(x). The main question here is whether GANs really learn the data distribution, and it’s being discussed in some recent studies.
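
Bayes’ rule itself is a few lines. The hard part is the likelihood p(x | c_k): a GAN gives samples, not densities, which is exactly the open question above. In this sketch, hypothetical 1-D Gaussian class-conditionals stand in for a conditional generative model with an explicit density. The class names and numbers are made up.

```python
import math

def gaussian_pdf(x, mean, std):
    return math.exp(-0.5 * ((x - mean) / std) ** 2) / (std * math.sqrt(2 * math.pi))

def bayes_posterior(x, likelihoods, priors):
    """p(c_k | x) = p(x | c_k) p(c_k) / p(x), with p(x) = sum_k p(x | c_k) p(c_k)."""
    joint = {c: likelihoods[c](x) * priors[c] for c in priors}
    evidence = sum(joint.values())
    return {c: j / evidence for c, j in joint.items()}

# Hypothetical class-conditional models standing in for p(x | c_k).
likelihoods = {
    "cat": lambda x: gaussian_pdf(x, 0.0, 1.0),
    "dog": lambda x: gaussian_pdf(x, 3.0, 1.0),
}
priors = {"cat": 0.7, "dog": 0.3}

# At x = 1.5 both likelihoods are equal, so the posterior equals the priors.
print(bayes_posterior(1.5, likelihoods, priors))
print(bayes_posterior(3.0, likelihoods, priors))
```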

5. Domain adaptation

This is one of the most powerful applications, in my opinion. In practice, we almost never have the same data source for training models and for running them in a real-world environment. In computer vision, different lighting conditions, camera settings, or weather can make even a very accurate model useless. In NLP / speech analysis, slang or accents can ruin the performance of a model trained on “grammatically correct” language. In signal processing, you most probably have totally different devices capturing data for training and for production. But we can also notice that both data “types” are very similar to each other. And we know machine learning models that map from one condition to another, preserving the main content but changing the details. Yes, I am talking about style transfer now, but for less creative purposes.

For example, if you’re dealing with an application that is supposed to work on some kind of CCTV camera, but you have trained your model on high-resolution images, you can try to use GANs to preprocess the images by denoising and enhancing them. A more radical example comes from signal processing: there are a lot of datasets of accelerometer data from mobile phones describing different human activities. But what if you want to apply models trained on phone data to a wristband? GANs can try to help you translate between the different kinds of movement signals. In general, generative models that generate not from noise but from a given input (a predefined prior) can help you with domain adaptation, covariate shift, and other problems caused by differences in data.
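
A GAN-based translator is beyond a short snippet, so here is a much simpler, non-adversarial stand-in with the same goal: map target-domain data so that a model trained on the source domain works on it. It assumes the shift is just a per-feature change of gain and offset (say, a different sensor), and fixes it by matching the target’s mean and standard deviation to the source’s. The data, feature, and names are hypothetical.

```python
import random
import statistics

random.seed(7)

def make_domain(n, gain, offset):
    """Hypothetical 1-D 'activity intensity' feature: walking ~ 1.0, running ~ 3.0,
    as seen through a sensor with its own gain and offset."""
    data = []
    for _ in range(n):
        label = random.randint(0, 1)  # 0 = walking, 1 = running
        raw = random.gauss(1.0 if label == 0 else 3.0, 0.4)
        data.append((gain * raw + offset, label))
    return data

source = make_domain(500, gain=1.0, offset=0.0)  # e.g. phone
target = make_domain(500, gain=0.5, offset=2.0)  # e.g. wristband, different sensor

# Simple threshold classifier trained on the source domain only.
means = [statistics.mean(x for x, y in source if y == c) for c in (0, 1)]
threshold = sum(means) / 2

def accuracy(data):
    return sum((x > threshold) == bool(y) for x, y in data) / len(data)

# Align target statistics to source statistics (uses target features only, no target labels).
src_mu = statistics.mean(x for x, _ in source)
src_sd = statistics.stdev(x for x, _ in source)
tgt_mu = statistics.mean(x for x, _ in target)
tgt_sd = statistics.stdev(x for x, _ in target)
adapted = [((x - tgt_mu) / tgt_sd * src_sd + src_mu, y) for x, y in target]

print(f"source: {accuracy(source):.2f}, target raw: {accuracy(target):.2f}, "
      f"target adapted: {accuracy(adapted):.2f}")
```

Real phone-to-wristband shifts are rarely a simple rescaling. That is where learned, non-linear mappings such as GANs come in, with the same “translate, then reuse the old model” structure.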

6. Data manipulation

We talked about style transfer in the previous section. What I don’t really like about it is that it’s a mapping function that works on the whole input and changes all of it. What if I want to change just the nose in a photo? Or change the color of a car? Or replace some words in a speech without changing it completely? If we want to do this, we already assume that our object can be described by some finite set of factors: for instance, a face is a combination of eyes, nose, hair, lips, etc., and these factors have their own attributes (color, size, etc.). What if we could map the pixels of a photo to some … where we can just adjust these factors and make the nose bigger or smaller? There are mathematical concepts that allow this: the manifold hypothesis and disentangled representations. The good news is that autoencoders and, probably, GANs allow us to model the distribution in such a way that it’s a mixture of these factors.
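
Once a model gives you a latent space where factors are (roughly) disentangled, editing one factor can be as simple as vector arithmetic. One common trick is to estimate an attribute direction as the difference between the mean latent codes of examples with and without the attribute, then move a code along that direction. The sketch below assumes hypothetical 3-D latent codes coming from some encoder. Decoding the edited code back into pixels is left to your model.

```python
def mean_vector(vectors):
    return [sum(col) / len(vectors) for col in zip(*vectors)]

def attribute_direction(with_attr, without_attr):
    """Latent direction associated with an attribute (e.g. 'big nose')."""
    return [a - b for a, b in zip(mean_vector(with_attr), mean_vector(without_attr))]

def edit(code, direction, strength):
    """Move a latent code along the attribute direction; strength < 0 weakens it."""
    return [c + strength * d for c, d in zip(code, direction)]

# Hypothetical latent codes produced by an encoder.
big_nose = [[1.0, 0.2, 2.0], [1.2, -0.1, 2.2], [0.8, 0.1, 1.8]]
small_nose = [[1.0, 0.1, 0.0], [1.1, -0.2, 0.3], [0.9, 0.0, -0.3]]

direction = attribute_direction(big_nose, small_nose)  # about [0.0, 0.1, 2.0]
face = [0.5, 0.3, 0.1]
bigger_nose = edit(face, direction, 0.5)
print(direction, bigger_nose)
```

In a well-disentangled space, the direction concentrates in the coordinates that encode the attribute (here mostly the third). The edit then leaves the other factors nearly untouched, unlike style transfer, which rewrites everything.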

7. Adversarial training

You might disagree with my adding a section about attacks on machine learning models, but it has everything to do with generative models (adversarial attack algorithms are, in fact, very simple ones) and with adversarial algorithms (since we have one model competing against another). Maybe you are familiar with the concept of adversarial examples: small perturbations of a model’s input (even a single pixel of an image) that cause totally wrong predictions. There are different ways to defend against them, and one of the most basic is called adversarial training: basically, it’s about leveraging adversarial examples to build more robust, and sometimes more accurate, models.

Without diving too deep into details, it means we still have a two-player game: an adversarial model (which simply perturbs the input within some epsilon) that tries to maximize its influence on the loss, and a classification model that tries to minimize its loss. It looks a lot like a GAN, but with a different purpose: making the model more robust to adversarial attacks and, through a kind of smart data augmentation and regularization, possibly improving its performance.
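
To make the two-player game concrete, here is a minimal sketch under loud assumptions. The classifier is logistic regression on a hypothetical 2-D toy dataset, and the adversary is the fast gradient sign method (FGSM), which shifts every input feature by ±epsilon in the direction that increases the loss. Choosing FGSM is our illustration; the text doesn’t prescribe a specific attack. For a linear model, FGSM happens to be the exact worst case within the epsilon box. `EPS`, `LR`, and `EPOCHS` are arbitrary.

```python
import math
import random

random.seed(3)
EPS, LR, EPOCHS = 0.3, 0.05, 20

def sigmoid(t):
    if t >= 0:
        return 1.0 / (1.0 + math.exp(-t))
    e = math.exp(t)
    return e / (1.0 + e)

def sign(v):
    return (v > 0) - (v < 0)

def proba(w, b, x):
    return sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b)

def log_loss(w, b, x, y):
    p = min(max(proba(w, b, x), 1e-12), 1 - 1e-12)
    return -math.log(p) if y == 1 else -math.log(1 - p)

def fgsm(w, b, x, y, eps):
    """Attacker: move each feature by eps along sign(d loss / d x) = sign((p - y) * w)."""
    g = proba(w, b, x) - y
    return [xi + eps * sign(g * wi) for xi, wi in zip(x, w)]

def train(data, adversarial):
    """Defender: SGD on log loss, optionally also on FGSM-perturbed copies."""
    w, b = [0.0, 0.0], 0.0
    for _ in range(EPOCHS):
        for x, y in data:
            inputs = [x, fgsm(w, b, x, y, EPS)] if adversarial else [x]
            for xb in inputs:
                g = proba(w, b, xb) - y  # d(log loss) / d(logit)
                w = [wi - LR * g * xi for wi, xi in zip(w, xb)]
                b -= LR * g
    return w, b

def accuracy(w, b, data, eps=0.0):
    hits = 0
    for x, y in data:
        xa = fgsm(w, b, x, y, eps) if eps else x
        hits += (proba(w, b, xa) > 0.5) == (y == 1)
    return hits / len(data)

def make_data(n):
    """Hypothetical two-class data centred at (-1, -1) and (1, 1)."""
    data = []
    for _ in range(n):
        y = random.randint(0, 1)
        c = 1.0 if y == 1 else -1.0
        data.append(([random.gauss(c, 0.8), random.gauss(c, 0.8)], y))
    return data

train_set, test_set = make_data(400), make_data(400)
for name, adv in [("standard", False), ("adversarial", True)]:
    w, b = train(train_set, adv)
    print(name, "clean:", accuracy(w, b, test_set), "under FGSM:", accuracy(w, b, test_set, EPS))
```

On data this simple, the two models may end up with similar numbers. The point is the structure of the loop: at every step the attacker first maximizes the loss within the epsilon box, and the classifier then minimizes the loss on the result.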

Takeaways

In this article we’ve seen several examples of how GANs and other generative models can be used for something other than generating nice images, melodies, or short texts. Of course, their main long-term goal will be generating real-world objects conditioned on the right situations, but today we can already exploit their ability to model distributions and learn useful representations to improve our current AI pipelines, secure our data, find anomalies, or adapt to more real-world cases. I hope you’ll find them useful and apply them in your projects. Stay tuned!

P.S.

Also follow me on Facebook for AI articles that are too short for Medium, on Instagram for personal stuff, and on LinkedIn!